Traces - issues, capture, storage, manipulation

Olivier Aubert - www.olivieraubert.net

INFO5 course - 15/11/2023

Summary

- Context: from physical to digital traces

- Traces for Learning Analytics

- Time-Series Databases

Context

Trace = sign of the past, inscription of a past event or process

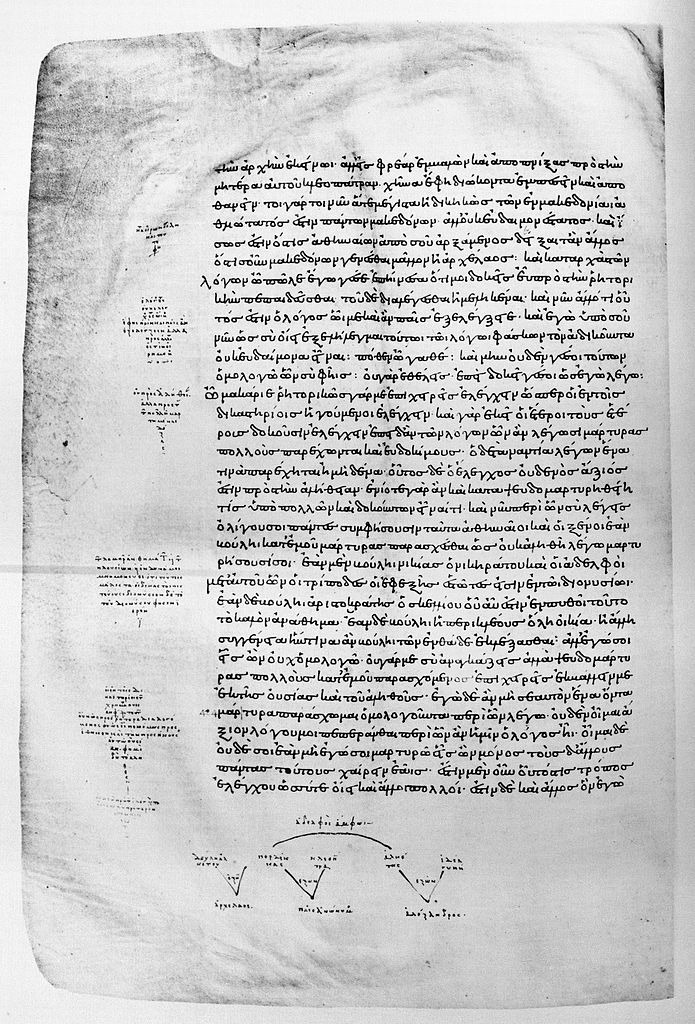

Marginalia

Annotation - trace of a scholarly reading activity

Page of the Codex Oxoniensis Clarkianus 39 (Clarke Plato). Dialogue Gorgias. Public Domain

Diaries

Weavings

Output from the performance "Le Déparleur" (Patrick Bernier et Olive Martin). Personal photograph - Olivier Aubert, CC BY-SA 4.0

Old representation

Other subjects

Physical paths

From physical to digital

Digital traces - sport

Digital traces

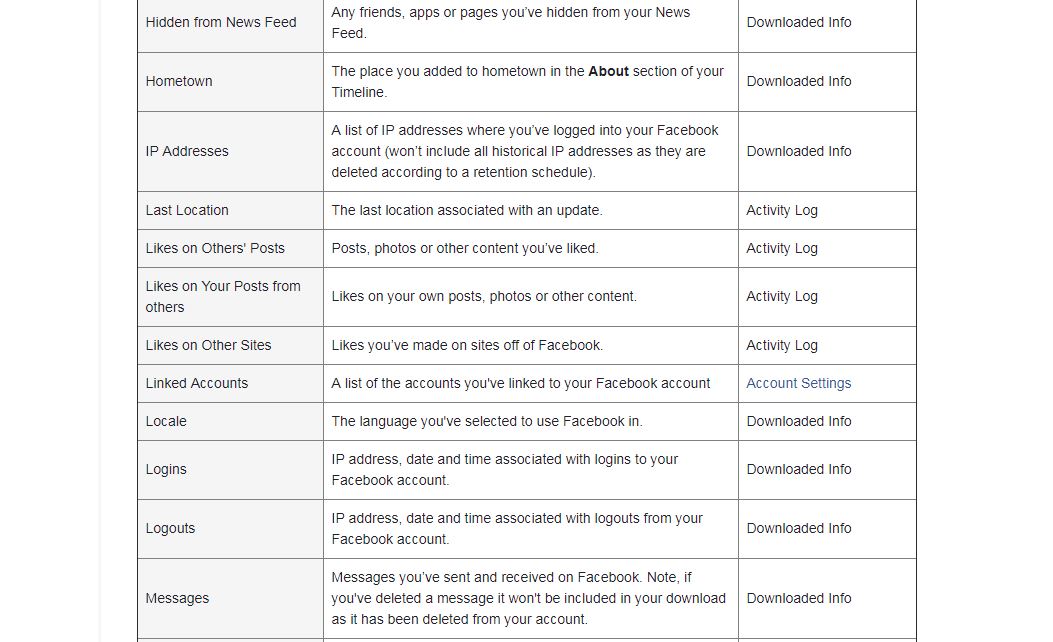

Social network traces Source

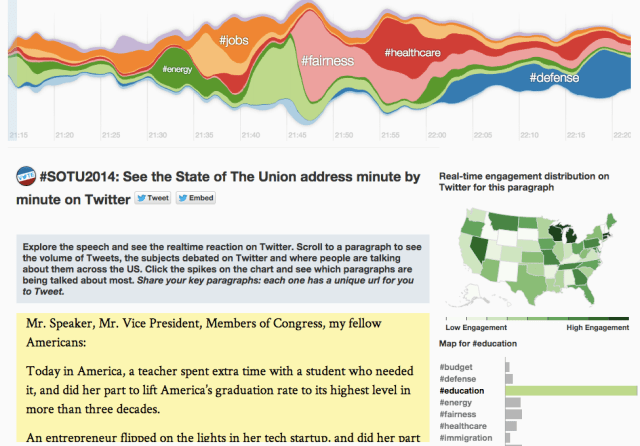

Digital traces - aggregation

Aggregated social network traces Source

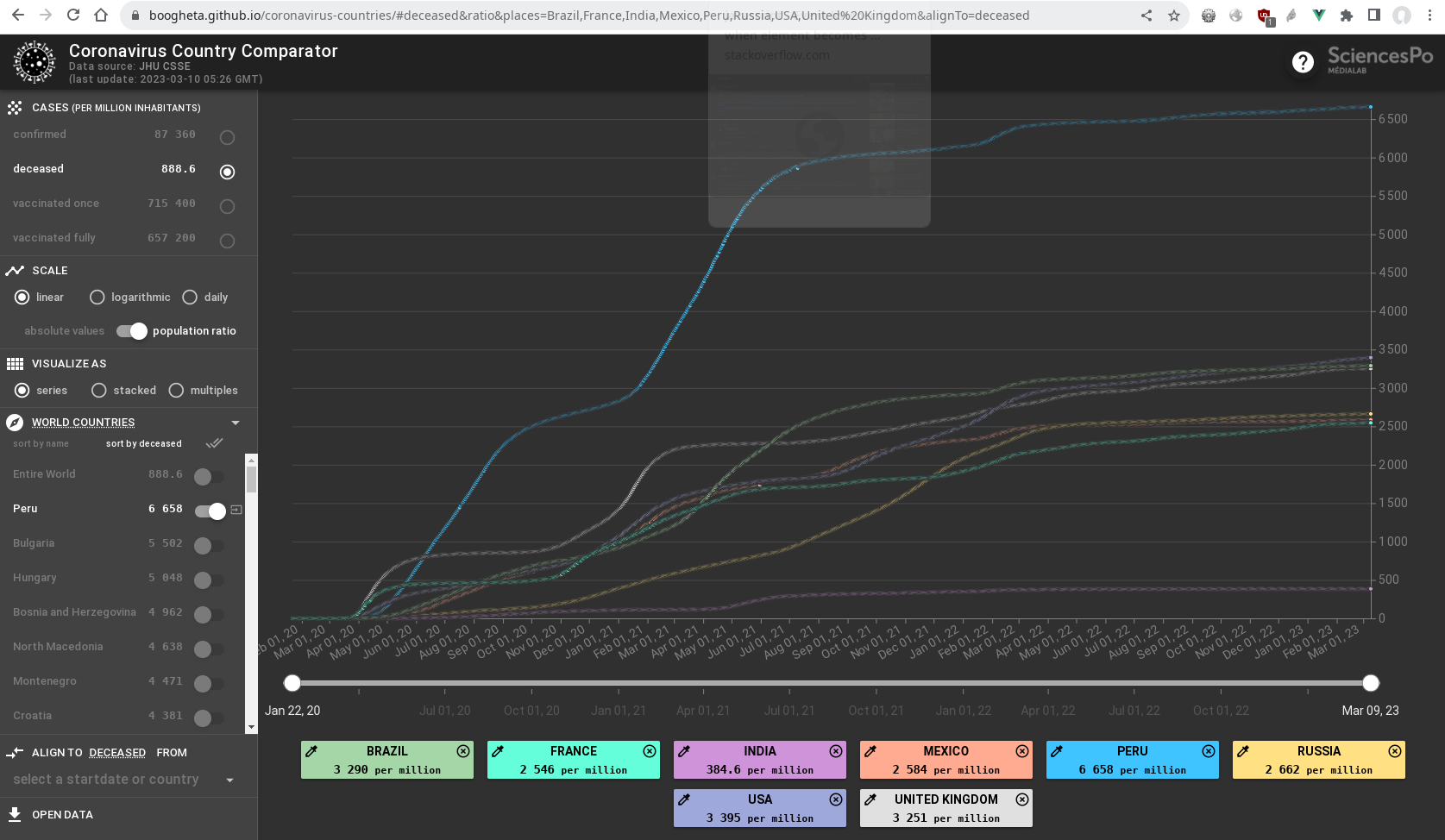

Digital traces - health

Coronavirus Country Comparator - Source

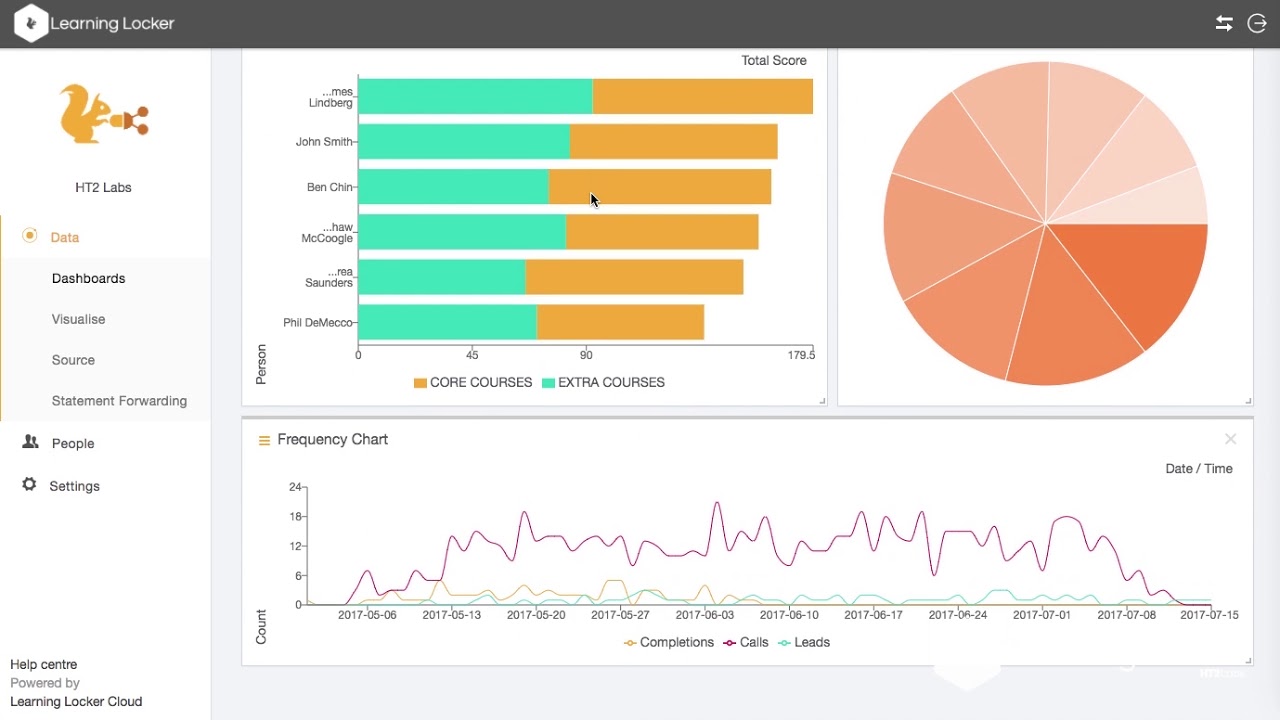

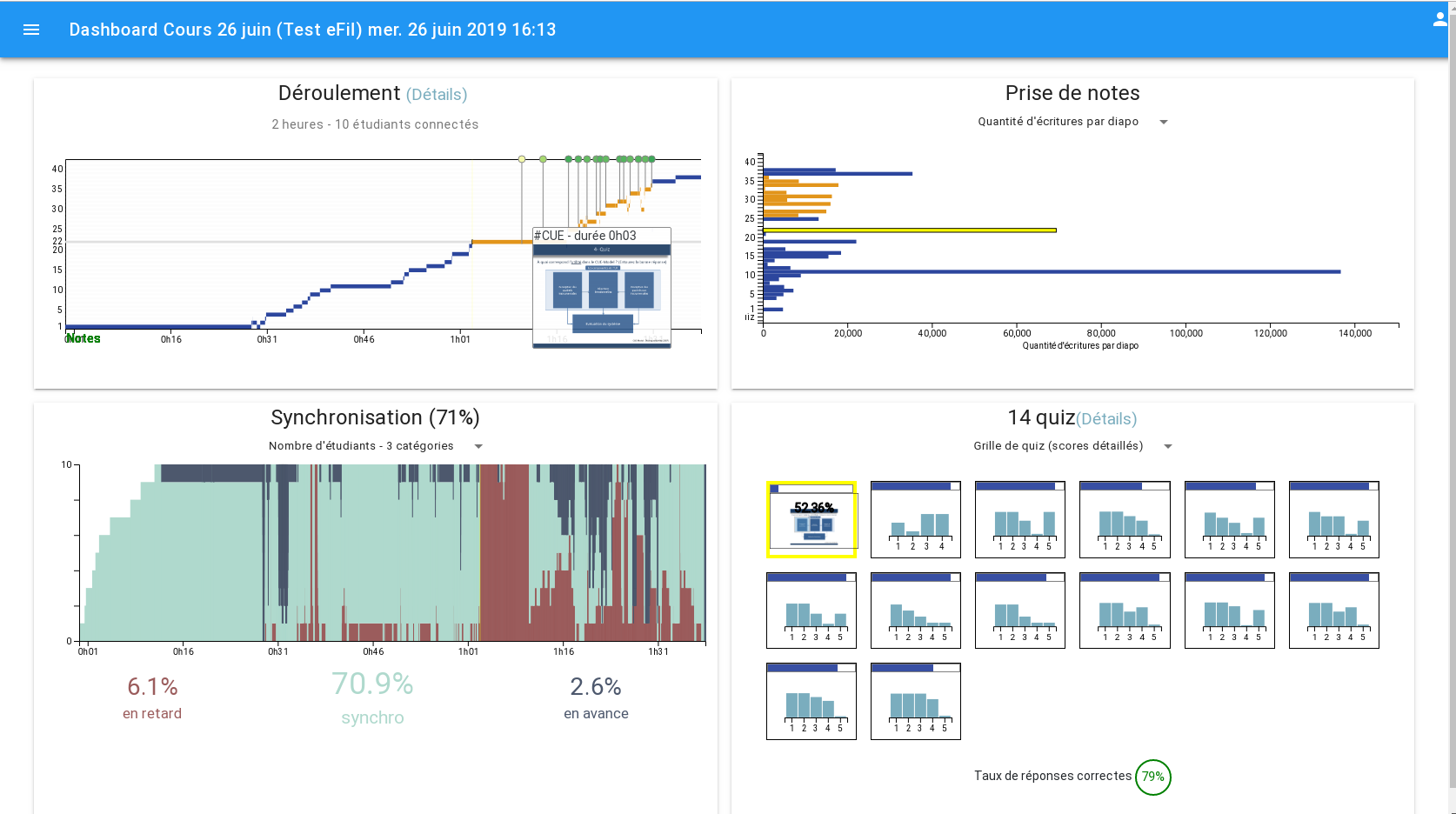

Digital traces - learning environments

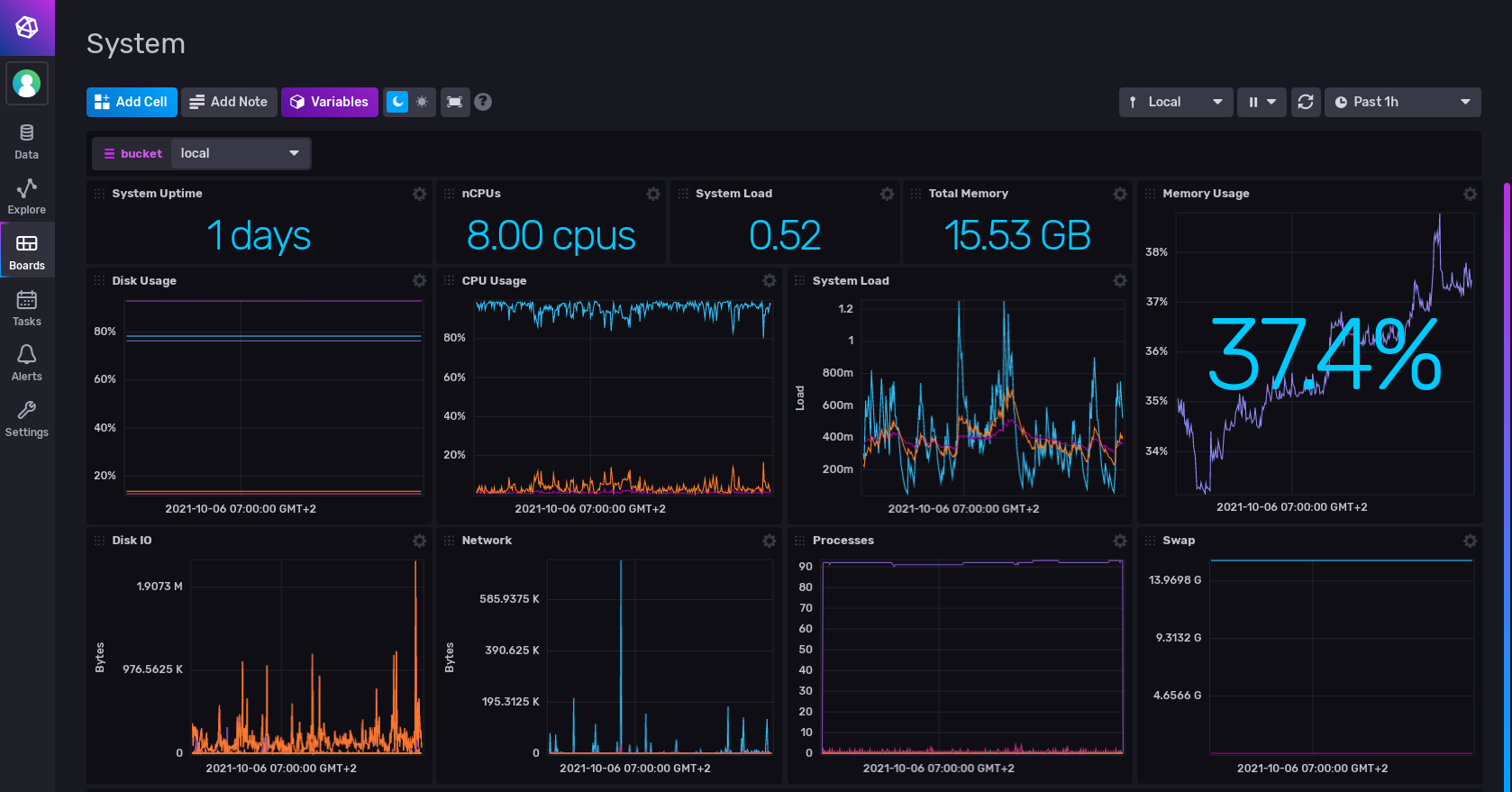

Learning Analytics dashboard Source

Digital traces - other subjects

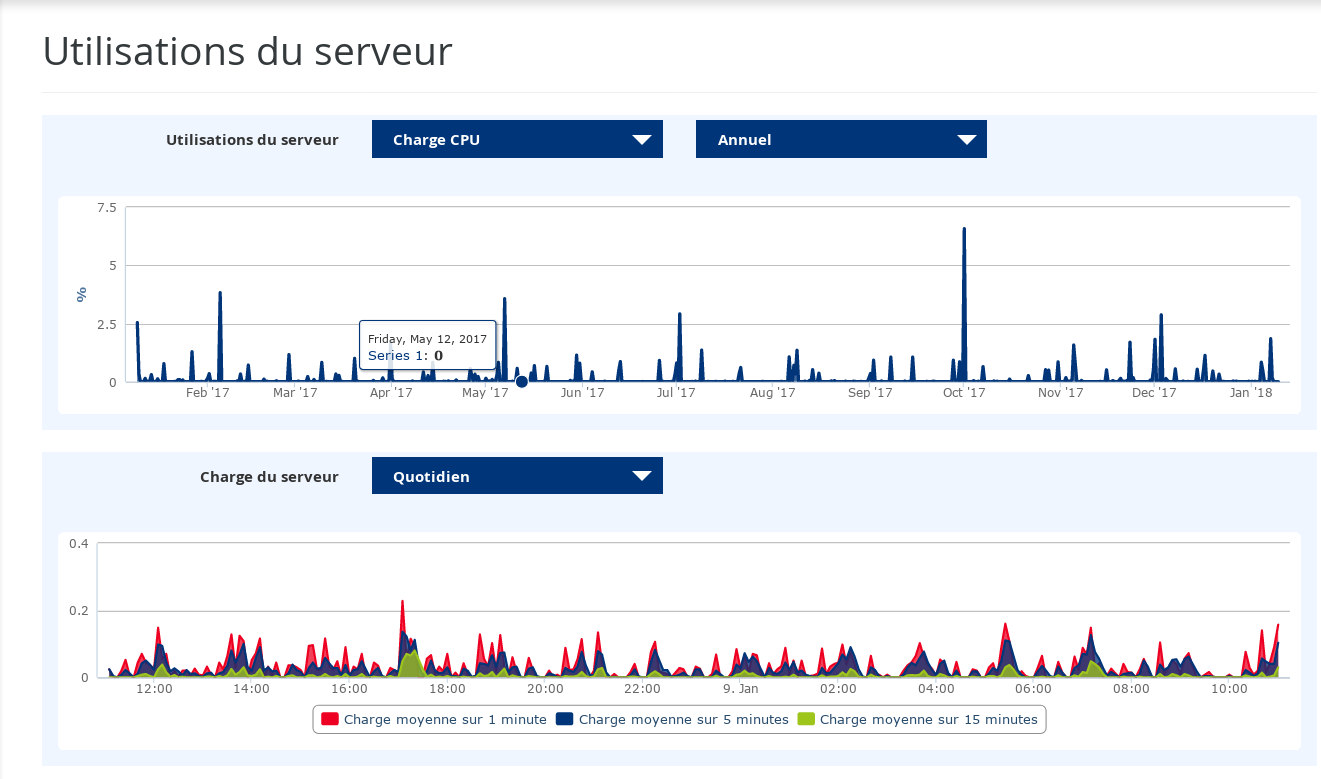

Access logs (offices, servers), sensor logs (health, Linky, IoT…)

Common features of traces

- time dimension

- trace of a past activity

- there is a subject

- some data is collected

General issues

Issues in

- privacy/ethics

- capture

- storage

- visualisation

- manipulation/analysis

- interpretation

Digital traces

- Many things automatically trackable at a low cost

- Possible to add higher level/synthetic events/interpretations

- Beware of the semantic gap between what can be observed and what can be interpreted

- Beware of the bias and the illusion of completeness (full representation)

Variety of digital traces

Many types of traces. Here we will focus on:

- activity traces for learning analytics (xAPI)

- sensor data (time series)

but there are also

- e-mails

- chat logs

- revision control information

- website analytics

- server logs

- …

A word about ethics

- "Great responsibility is the inseparable consequence of great power." (Plan de travail, de surveillance et de correspondance, Comité de Salut Public, 1793)

- Programmer’s responsibility/ethics

- What do you do if faced with the task of implementing illegal/immoral software/processing?

User point of view

Wooclap survey time!

Ethics - Food for thought

- Good to think about it before the issue arises…

- Some reading ideas:

- (English) A Gift of Fire: Social, Legal, and Ethical Issues for Computing Technology

- (French) Manuel d’épistémologie pour l’ingénieur.e (available at the university library)

- (French) Quelle éthique pour l'ingénieur

- (French - video) L’éthique de l’ingénierie existe-t-elle ?

- A Framework for Ethical Decision Making

Some examples

- SpotterEDU (Bluetooth beacons to track student attendance), with some resistance

- Awful AI is a curated list tracking current scary usages of AI - hoping to raise awareness of its misuses in society

- Mobile tracking

- In recent news/events:

Some user-side solutions

- Phone

- ExodusPrivacy

- TrackerControl

- Computer

- cookie/ad/js blocking

- but browser fingerprinting and server-side tracking (IP…)

- Real life

- video cameras

- access cards (bus, office…)

Regulation - GDPR / RGPD

General Data Protection Regulation (GDPR) / Règlement Général sur la Protection des Données (RGPD)

- Hypertextualized version

- Applies from May 25th, 2018

- Applies to any EU company, or any company processing personal data of people in the EU (not only EU citizens)

- Fines up to 20 M€ or 4% of the annual worldwide turnover, whichever is higher

- Applies to non-anonymized, personal data, with more constraints for sensitive data

GDPR principles 1/2

- Explicit consent required (with some exceptions) and transparent information in any case.

- Research exceptions

- Right of Access, Right to Erasure, Right to Object

- Data portability

- Data Protection Officer as a reference/contact person

GDPR principles 2/2

- Responsibility and Accountability

- Privacy by Design and by default, data minimization

- Pseudonymisation encouraged

- Data breaches must be notified within 72 hours at most

- Limited storage duration
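Pseudonymisation can be illustrated with a keyed hash applied to identifiers before traces are stored. This is only a minimal sketch under assumptions: the function name `pseudonymise`, the key and the e-mail addresses are hypothetical, and keyed hashing is one possible technique among others, not something the regulation prescribes.

```python
import hashlib
import hmac


def pseudonymise(identifier: str, secret_key: bytes) -> str:
    """Return a stable pseudonym for an identifier using HMAC-SHA256."""
    digest = hmac.new(secret_key, identifier.encode("utf-8"), hashlib.sha256)
    return digest.hexdigest()


# Hypothetical key: it must be stored separately from the pseudonymised traces.
key = b"example-key-kept-outside-the-dataset"

p1 = pseudonymise("alice@example.com", key)
p2 = pseudonymise("alice@example.com", key)
p3 = pseudonymise("bob@example.com", key)

# The same identifier always maps to the same pseudonym, so traces can still
# be grouped per person without exposing the original identifier.
print(p1 == p2, p1 == p3)
```

As long as the key exists, the data can be re-attributed to a person, so it remains personal data.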

Some GDPR application examples

- Amazon €746 million (2021) - targeted ad system not based on free consent

- WhatsApp €225 million (2021) - lack of transparent information

- Facebook €17 million (2022) - data breach notification

- Instagram €405 million (2022) - violation of children's privacy

- Facebook €1200 million (2023) - transfer of personal data to the US

And beyond fines

01/11/2023 : EDPB Urgent Binding Decision on processing of personal data for behavioural advertising by Meta

Meta banned from targeted advertising (article in French)

See EDPB website for more decisions

Learning analytics

Definition

Learning analytics is the measurement, collection, analysis and reporting of data about learners and their contexts, for purposes of understanding and optimizing learning and the environments in which it occurs. Source

Interdisciplinary domain (data science, pedagogy, social sciences)

Learning Analytics Lifecycle

Application domains

- analytics on interaction with the learning platforms

- analytics around social interactions

- analytics around learning content

- analytics in different spaces (digital/f2f)

Uses

- Real-time or asynchronous feedback

- Reflexivity

- Usage analysis

- Reporting

- Alerts

- Recommendation

- Document (re)design

- …

Data analytics levels

- Descriptive Analytics: What happened?

- Metrics, summaries, visualisations…

- Diagnostic Analytics: Why did it happen?

- Outliers, patterns, PCA, correlations…

- Predictive Analytics: What might happen if specific conditions occur?

- Probabilities, scenarios, predictive modelling…

- Prescriptive Analytics: Which actions are best?

- Simulations, recommendation engines…

Requirements for L.A.

Issue: traces are generated on a variety of platforms

- we need a common model/protocol

- able to accommodate a variety of scenarios

- we need to gather traces in a common place

- trace repositories (personal/shared) called LRS (Learning Record Stores)

- depending on the nature of the trace data, there may be other constraints (frequency, volume)

And again, ethics

As mentioned before, ethics is a consideration that must always come first.

Models/Protocols

- ExperienceAPI

- xAPI (ex-TinCanAPI)

- SCORM successor

- much inspired by ActivityStreams W3C recommendation

- Caliper (IMS Global)

- another approach (more metrics-oriented)

Experience API evolution

- SCORM (2000) shortcomings

- need to be connected

- content must be imported and registered into the LMS before tracking

- LRS embedded in LMS

- Call for research for evolution in 2010

- TinCanAPI project, inspired by ActivityStreams

- v 1.0 released in 2013

Experience API data model

ExperienceAPI website - Reference spec

Activity events are recorded as Statements

Statement = (timestamp, actor, verb, object) [+ context] [+ result] [+ stored timestamp] [+ authority]

xAPI Statement example

{ "timestamp": "2023-10-15T11:30:02.598441+01:00",

"actor": { "name": "Olivier Aubert",

"mbox": "mailto:contact@olivieraubert.net" },

"verb": { "id": "https://activitystrea.ms/schema/1.0/present",

"display": { "en-US": "presented" } },

"object": { "id": "https://olivieraubert.net/cours/gcn_stockage_traces",

"definition": {

"name": "Traces - issues, capture, storage, manipulation",

"type": "https://adlnet.gov/expapi/activities/lesson"

}

},

"context": { "language": "fr",

"extensions": {

"https://www.polytech.univ-nantes.fr/xapi/polytechRoom": "D004"

}

},

"stored": "2023-10-12T11:30:03.954814+01:00"

}
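The statement structure can also be assembled programmatically. The following is a minimal sketch, assuming a hypothetical helper named `make_statement`; it only covers the timestamp, actor, verb and object parts and leaves context, result, stored and authority aside.

```python
import json
from datetime import datetime, timezone
from typing import Optional


def make_statement(actor: dict, verb_id: str, verb_label: str,
                   object_id: str, timestamp: Optional[datetime] = None) -> dict:
    """Build a minimal statement: (timestamp, actor, verb, object)."""
    when = timestamp or datetime.now(timezone.utc)
    return {
        "timestamp": when.isoformat(),
        "actor": actor,
        "verb": {"id": verb_id, "display": {"en-US": verb_label}},
        "object": {"id": object_id},
    }


statement = make_statement(
    actor={"name": "Olivier Aubert", "mbox": "mailto:contact@olivieraubert.net"},
    verb_id="https://activitystrea.ms/schema/1.0/present",
    verb_label="presented",
    object_id="https://olivieraubert.net/cours/gcn_stockage_traces",
    timestamp=datetime(2023, 10, 15, 11, 30, 2, tzinfo=timezone.utc),
)

# Serialise to JSON, the format in which statements are exchanged.
print(json.dumps(statement, indent=2))
```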

Actor representation

Identified by exactly ONE of mbox, mbox_sha1sum, openid, account (homePage, name)

{

"name": "Olivier Aubert",

"mbox": "mailto:contact@olivieraubert.net"

}

or

{

"name": "Olivier Aubert",

"account": {

"homePage": "https://orcid.org/",

"name": "0000-0001-8204-1567"

}

}

or

{

"name": "Olivier Aubert",

"account": {

"homePage": "https://madoc.univ-nantes.fr/",

"name": "aubert-o"

}

}
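A small check can verify that an actor carries a single identifier, and a clear-text mbox can be swapped for its mbox_sha1sum (the hex-encoded SHA-1 of the mailto IRI). This is a sketch only: the helper names `identifier_count` and `hash_mbox` are hypothetical.

```python
import hashlib

# The four identifier properties listed above.
IDENTIFIER_KEYS = ("mbox", "mbox_sha1sum", "openid", "account")


def identifier_count(agent: dict) -> int:
    """Count how many identifier properties the agent carries."""
    return sum(1 for key in IDENTIFIER_KEYS if key in agent)


def hash_mbox(agent: dict) -> dict:
    """Return a copy of the agent where mbox is replaced by mbox_sha1sum."""
    hashed = {key: value for key, value in agent.items() if key != "mbox"}
    hashed["mbox_sha1sum"] = hashlib.sha1(agent["mbox"].encode("utf-8")).hexdigest()
    return hashed


agent = {"name": "Olivier Aubert", "mbox": "mailto:contact@olivieraubert.net"}
hashed_agent = hash_mbox(agent)

print(identifier_count(agent), identifier_count(hashed_agent))
```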

Actor/Group representation

Same as an Agent, plus an objectType set to "Group" and a member list.

{

"mbox": "mailto:info@tincanapi.com",

"name": "Info at TinCanAPI.com",

"objectType": "Group",

"member": [

{ "mbox_sha1sum": "48010dcee68e9f9f4af7ff57569550e8b506a88d" },

{ "mbox_sha1sum": "9023723cde2d3efc5810dcee68e9f9f4af7ff575" },

…

]

}

Verb representation

URI + display string

{

"id": "http://adlnet.gov/expapi/verbs/experienced",

"display": {

"en-US": "experienced"

}

}

Verbs are usually picked from the xAPI vocabulary & profile index (common vocabularies); many common ones come from the ActivityStreams W3C recommendation.

In particular, CMI5 defines (among other things) a standard profile for learning content.

CMI5 - xAPI profile

- xAPI is more generic than SCORM

- CMI5 is an xAPI profile that normalizes vocabularies as well as other aspects (content launch, score reporting)

- See https://xapi.com/cmi5/comparison-of-scorm-xapi-and-cmi5/

Object representation

Normally an activity, but can also be a person, group or even another statement.

{ "id": "https://olivieraubert.net/cours/gcn_stockage_traces",

"definition": {

"name": "Traces - issues, capture, storage, manipulation",

"type": "http://adlnet.gov/expapi/activities/lesson"

}

}

or

{ "objectType": "Agent",

"mbox":"mailto:test@example.com"

}

Context representation

Additional information about the activity context

"context": { "language": "fr",

"extensions": {

"http://www.polytech.univ-nantes.fr/xapi/polytechRoom": "D004"

}

}

Result representation

Representation of a measured outcome.

- score (Object): score of the Agent in relation to success/quality of the experience.

- success (Boolean): indicates whether or not the attempt on the Activity was successful.

- completion (Boolean): indicates whether or not the Activity was completed.

- response (String): a response appropriately formatted for the given Activity.

- duration (String): period of time over which the Statement occurred.
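In xAPI the duration is expressed as an ISO 8601 duration string. The sketch below builds a result object from these fields; the helper name `iso8601_duration` and the example values are hypothetical, and only whole seconds, minutes and hours are handled.

```python
def iso8601_duration(seconds: float) -> str:
    """Format a number of seconds as an ISO 8601 duration such as PT1H30M5S."""
    hours, rest = divmod(int(seconds), 3600)
    minutes, secs = divmod(rest, 60)
    text = "PT"
    if hours:
        text += f"{hours}H"
    if minutes:
        text += f"{minutes}M"
    # Always emit at least the seconds so that a zero duration gives "PT0S".
    if secs or text == "PT":
        text += f"{secs}S"
    return text


# Illustrative result for an attempt that lasted 1 h 30 min 5 s.
result = {
    "score": {"scaled": 0.8},
    "success": True,
    "completion": True,
    "duration": iso8601_duration(5405),
}

print(result["duration"])  # PT1H30M5S
```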

Extensions

- object, context and result can feature an "extensions" list

- Custom vocabulary, key-value pairs where keys are URIs

"context": { "language": "fr",

"extensions": {

"http://www.polytech.univ-nantes.fr/xapi/polytechRoom": "D004"

}

}

xAPI protocols

4 REST APIs (last 3: Document APIs)

- Statement API: main API for statements

- State API: scratch space in which arbitrary information can be stored in the context of an activity, agent, and registration (per user/per activity).

- Agent Profile API: additional data against an agent profile (group, settings…)

- Activity Profile API: additional data against an activity not specific to a user (collaboration activities, social interaction)

Example REST call (1/2)

Call (source)

POST https://v2.learninglocker.net/v1/data/xAPI/activities/state

URL Parameters

activityId: http://www.example.com/activities/1

stateId: http://www.example.com/states/1

agent: {"objectType": "Agent", "name": "John Smith",

"account": {"name": "123",

"homePage": "http://www.example.com/users/"}}

Example REST call (2/2)

Headers

Authorization: Basic YOUR_BASIC_AUTH

X-Experience-API-Version: 1.0.0

Content-Type: application/json

Body

{

"favourite": "It's a Wonderful Life",

"cheesiest": "Mars Attacks"

}
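The same State API call can be prepared with the Python standard library. This is a sketch under assumptions: the helper name `build_state_request` and the `key`/`secret` credentials are hypothetical, and the request is only built here, not sent.

```python
import base64
import json
from urllib.parse import urlencode
from urllib.request import Request


def build_state_request(endpoint, key, secret, activity_id, state_id, agent, document):
    """Prepare (without sending) a POST request to the State API."""
    # The agent is passed as a JSON string in the URL parameters.
    params = urlencode({
        "activityId": activity_id,
        "stateId": state_id,
        "agent": json.dumps(agent),
    })
    credentials = base64.b64encode(f"{key}:{secret}".encode("utf-8")).decode("ascii")
    return Request(
        url=f"{endpoint}/activities/state?{params}",
        data=json.dumps(document).encode("utf-8"),
        method="POST",
        headers={
            "Authorization": f"Basic {credentials}",
            "X-Experience-API-Version": "1.0.0",
            "Content-Type": "application/json",
        },
    )


request = build_state_request(
    endpoint="https://v2.learninglocker.net/v1/data/xAPI",
    key="key", secret="secret",  # placeholder credentials
    activity_id="http://www.example.com/activities/1",
    state_id="http://www.example.com/states/1",
    agent={"objectType": "Agent", "name": "John Smith",
           "account": {"name": "123", "homePage": "http://www.example.com/users/"}},
    document={"favourite": "It's a Wonderful Life", "cheesiest": "Mars Attacks"},
)

print(request.get_method(), request.full_url)
```

Passing the prepared request to `urllib.request.urlopen` would actually send it, which requires valid credentials for the target LRS.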

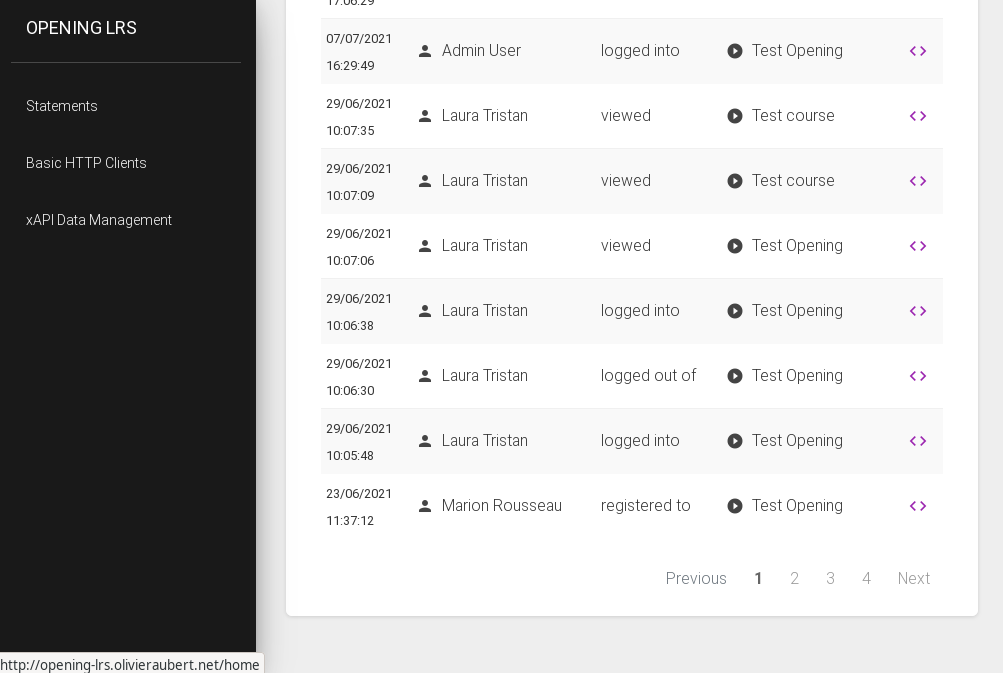

Trace repositories

- Learning Record Store

- personal or shared

- integrated with or external to the LMS

- Available solutions: https://xapi.com/get-lrs/

- Some open-source solutions: Learning Locker, TraxLRS, SQLRS

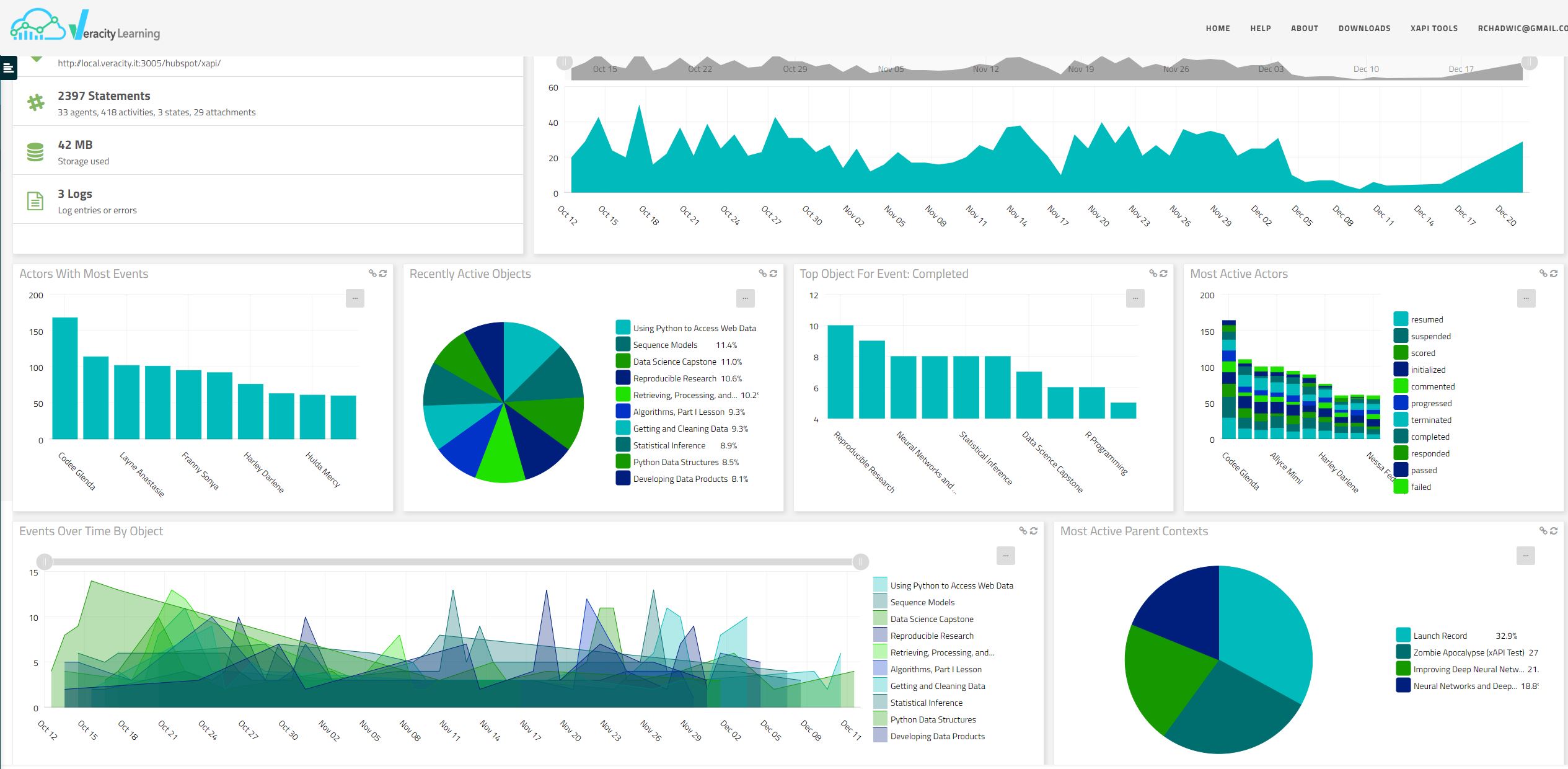

Example dashboard

From Veracity

Learning Locker

- Open-Source LRS

- Node.js / MongoDB based

- Features

- Trace storage

- Query builder

- Dashboard builder

TraxLRS

- Open-Source LRS

- PHP - MySQL/PostgreSQL based

- Features

- Trace storage

- Requires additional components (like Kibana) for visualisation/analysis

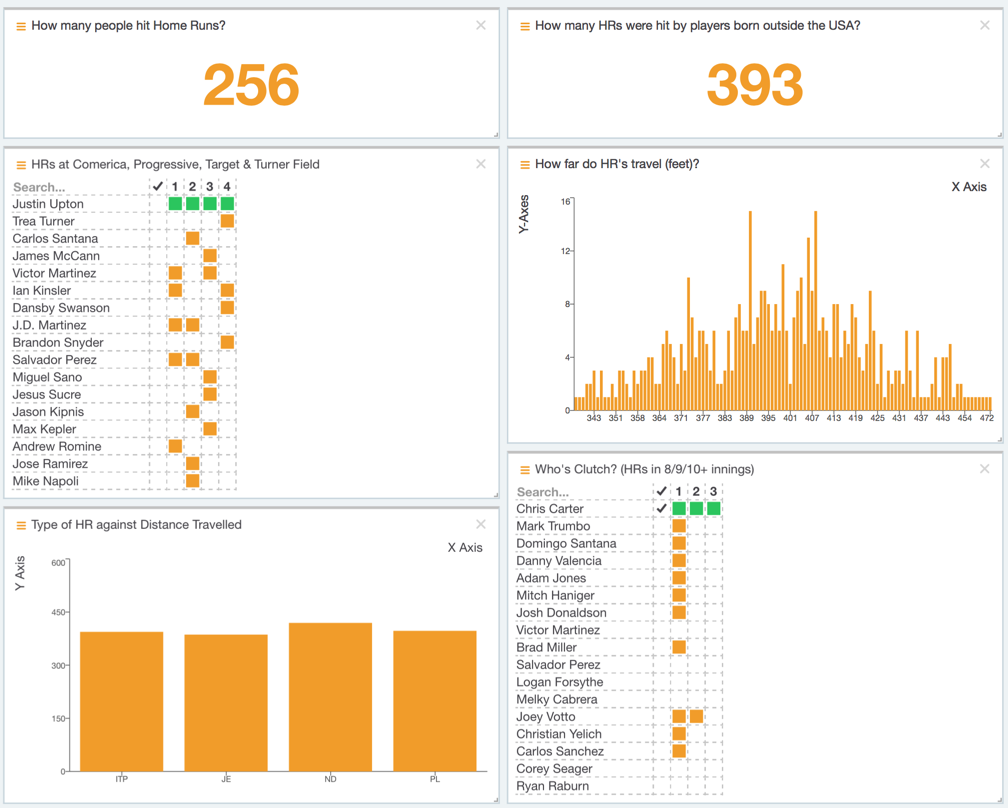

Example application

eFiL project

Source: eFiL

Time-series databases

Time Series Definition

A Time Series is

- a collection of observations or data points obtained by repeated measurements over time

- measurements often happen at equal intervals

- measurement is well defined (who measures what)

- time is an illusion, any sequence can be seen as a time series

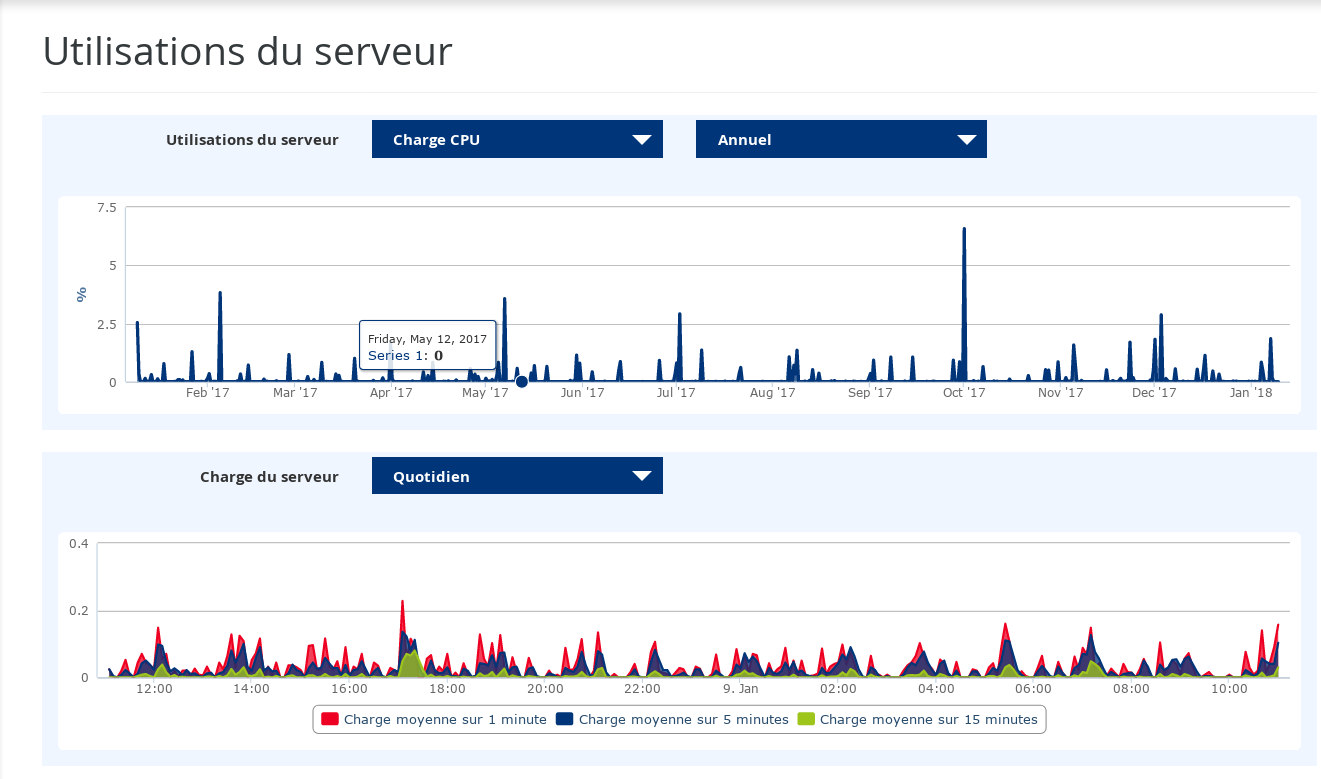

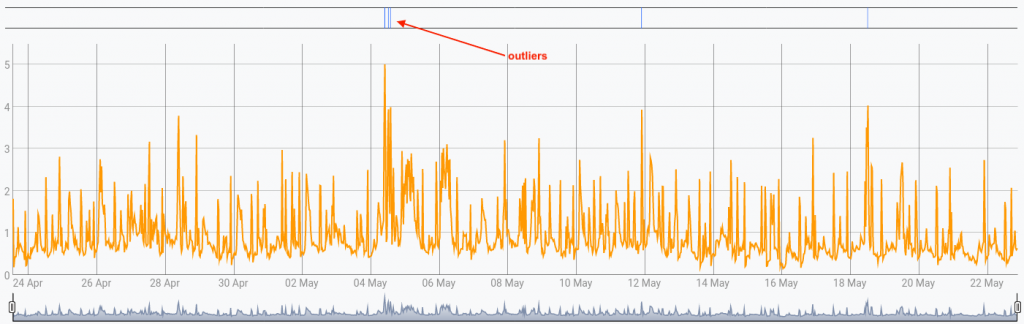

Use cases 1/2

- Computer system monitoring of various measures (processor, network load, disk usage…) for

- Continuous monitoring

- Prediction for future possible events (storage limit, etc)

- Post-mortem analysis of the causes of events (mostly failures)

Use cases 2/2

- Finance

- Observing trends of stock prices

- Handling bank accounts history

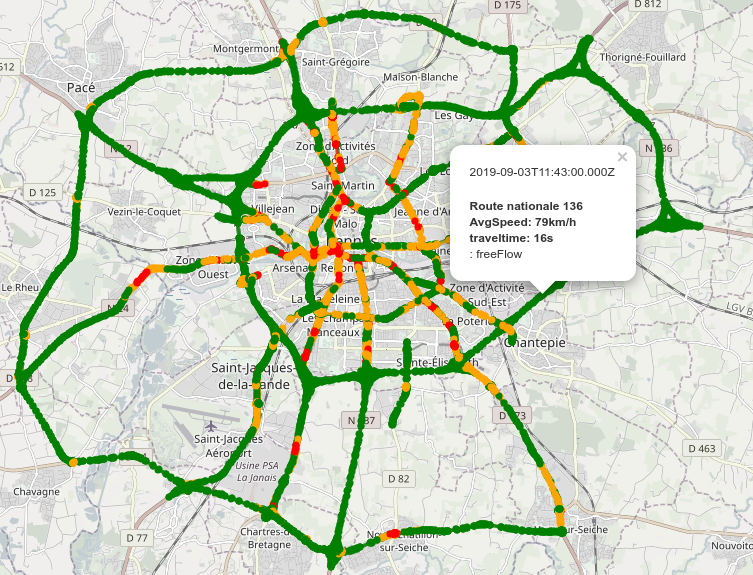

- IOT / Industry / Health / Aeronautics / Telecom / Agriculture / Defence / Smart City / Insurance…

- Storing/analysing sensor measures

- Continuous measures and evaluation

- Warning when measurements deviate from the norm

- Comparisons/trends

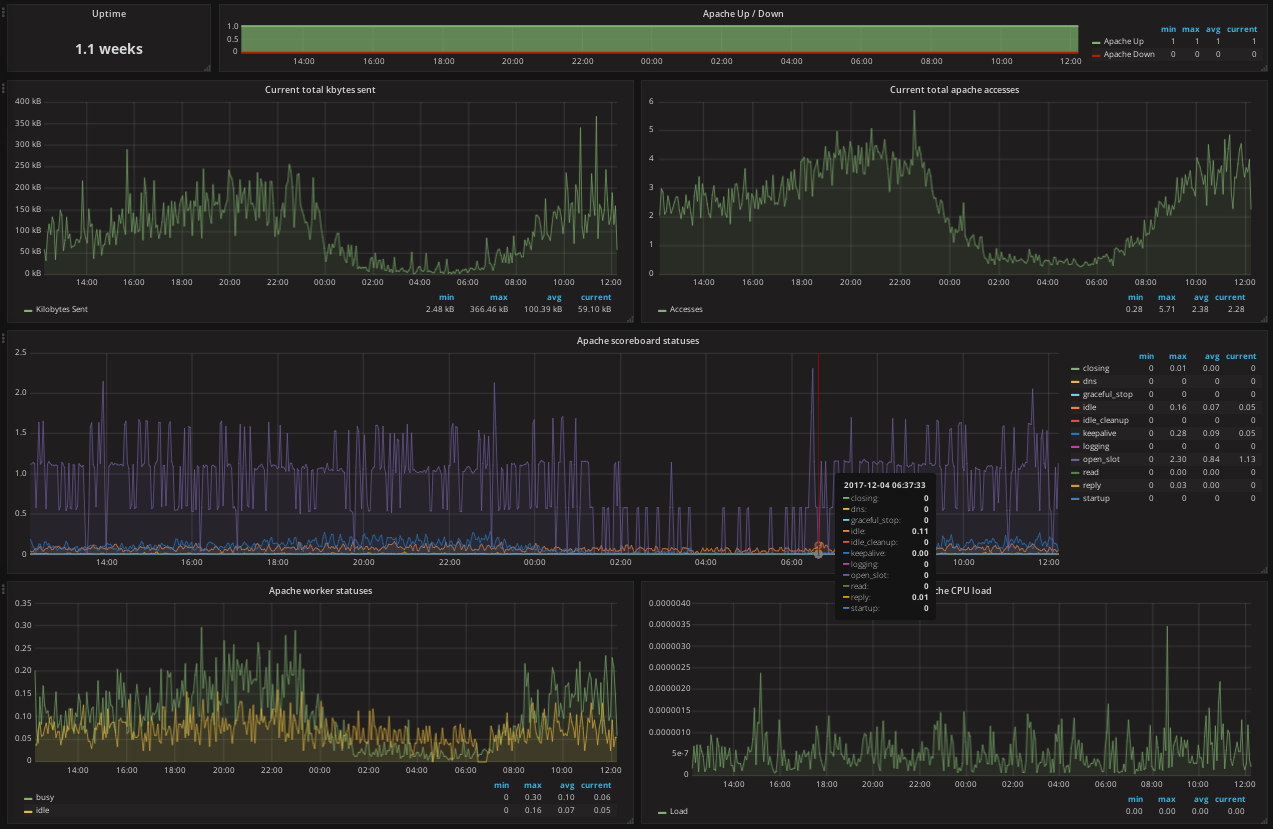

Example: server monitoring

Example: electrical consumption

Source: Analyze your electrical consumption using Warp10

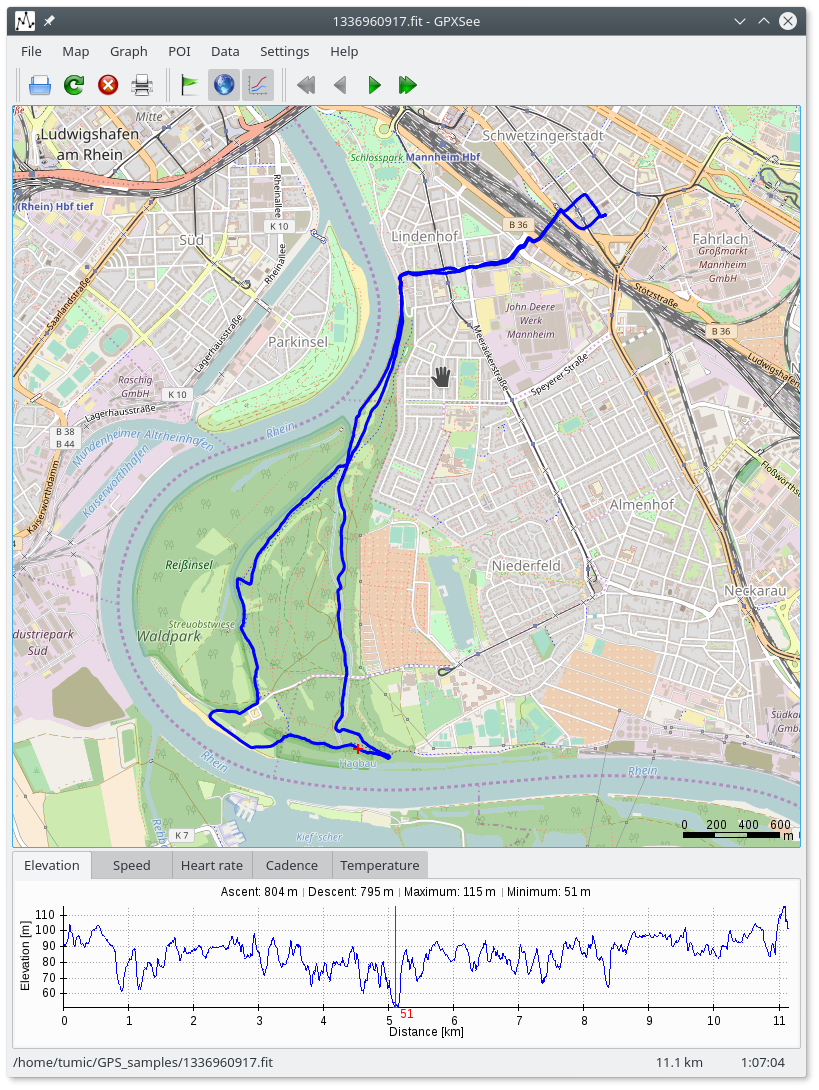

Example: geospatial time series

Source: Traffic data for Smart Cities

Definition of Time Series Data

Time series data can be defined as:

- a sequence of numbers representing the measurements of a variable at time intervals.

- identifiable by a source name or id and a metric name or id.

- possibly associated with tags

- consisting of {timestamp , value} tuples

- value: float most of the time, but can be any datatype

- raw data is immutable, unique and sortable

- possible extension: Geo-TimeSeries

Conventional database approach

Time Series can be stored in conventional databases (relational) - see "Point Based data model" - slide 41 of Temporal Databases lecture

| series_id | timestamp | value |
|-----------|-----------|-------|
| s01 | 00:50:37 | 2.56 |
| s02 | 00:53:53 | 3.12 |
| s01 | 00:56:52 | 4.42 |
| s02 | 01:00:16 | 3.23 |
| s01 | 01:03:32 | 5.20 |
| s01 | 01:06:24 | 6.20 |
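The point-based table above can be tried out with an in-memory SQLite database. This is a minimal sketch: the table name `measures` and the hourly-maximum query are illustrative choices, not part of the lecture material.

```python
import sqlite3

rows = [
    ("s01", "00:50:37", 2.56),
    ("s02", "00:53:53", 3.12),
    ("s01", "00:56:52", 4.42),
    ("s02", "01:00:16", 3.23),
    ("s01", "01:03:32", 5.20),
    ("s01", "01:06:24", 6.20),
]

connection = sqlite3.connect(":memory:")
connection.execute(
    "CREATE TABLE measures (series_id TEXT, timestamp TEXT, value REAL, "
    "PRIMARY KEY (series_id, timestamp))"
)
connection.executemany("INSERT INTO measures VALUES (?, ?, ?)", rows)

# Hourly maximum per series: substr(timestamp, 1, 2) extracts the hour.
hourly_max = connection.execute(
    "SELECT series_id, substr(timestamp, 1, 2), MAX(value) "
    "FROM measures "
    "GROUP BY series_id, substr(timestamp, 1, 2) "
    "ORDER BY series_id, substr(timestamp, 1, 2)"
).fetchall()

for series_id, hour, maximum in hourly_max:
    print(series_id, hour, maximum)
```

Every inserted point updates the primary-key index, which is fine at this scale but becomes a bottleneck at high write rates.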

Database issues 1/2

- scalability issues

- volume (# of sensors, # of measures)

- frequency (1Hz -> 86400 measures/day, 1 MHz sensor (acoustics) -> 1e6 measures/second)

- common workload in time series : millions of entries per second

- OVH example : 1.5M datapoints/s, 24/7, peaks at ~10M datapoints/s

- frequent writes mean frequent index updates and memory cache pressure - storage characteristics matter, see interesting discussion on Timescale blog.
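The orders of magnitude quoted above follow from simple arithmetic. The sketch below uses a hypothetical helper `datapoints_per_day` and assumes a constant sampling rate over the whole day.

```python
SECONDS_PER_DAY = 24 * 60 * 60  # 86400


def datapoints_per_day(frequency_hz: float, sensors: int = 1) -> float:
    """Number of datapoints produced in one day at a constant sampling rate."""
    return frequency_hz * SECONDS_PER_DAY * sensors


one_hz = datapoints_per_day(1)         # a single 1 Hz sensor
ovh_daily = datapoints_per_day(1.5e6)  # sustained 1.5M datapoints/s

print(one_hz)     # 86400
print(ovh_daily)  # about 1.3e11 datapoints per day
```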

Database issues 2/2

- query/analysis/transformation

- expressivity issues

- query performance

- query characteristics (large batches, downsampling…)

- uncommon analyses

⇒ need for specialized time series databases

Other database issues

Warm vs cold data

Storage/archival of cold data/timeseries.

- how to efficiently store it?

- how to efficiently query it?

Example from the talk "Time Series et santé: datalogger médical 100hz, retour d'expérience après un an" (time series and health: a 100 Hz medical datalogger, lessons learned after one year):

- 2 year capture of intracranial pressure (100Hz) -> 10e9 datapoints

- use of HFile (a compressed format for Warp10) to archive the data

Which database for which usage?

Global categories of databases:

- relational

- document stores (complex structures with different fields)

- column stores (key-value)

- object-oriented

- graph (relationships)

- triple stores (semantic database)

- Time Series

- …

Time-Series Database

A TSDB system is

- a container for a collection of multiple time series

- software system optimized for storing and querying arrays of numbers/values indexed by time, datetime or datetime range

- specialized for handling/processing time series data, taking into account their characteristic workload

Characteristic write workload

- write-mostly is the norm (95% to 99% of all workload)

- writes are almost always sequential appends

- writes to distant past or distant future are extremely rare

- updates are rare

- deletes happen in bulk

Characteristic read workload

- reads happen rarely

- reads are usually much larger than available memory (need for server-side processing)

- multiple reads are usually sequential, ascending or descending

- reads of multiple series and concurrent reads are common (batch reading)

Characteristic usage

- monitoring

- explore/understand data

- data aggregation

- outlier/anomaly detection

- forecasting

- …

TSDB Designs

Based on these characteristics

- proper internal representation of time series

- distributed database options allow for more scalability than monolithic solutions

- server-side query processing is necessary

- memory caching/optimization

Storage implementation

- May be based on existing DBMS (Cassandra, HBase, CouchDB…) - or even relational DB - for storing data or metadata.

- May use its own data format (Time Structured Merge Tree for InfluxDB)

- May focus on compression to store more data in memory (Gorilla by Facebook)
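Gorilla compresses timestamps with a delta-of-delta scheme: regularly spaced timestamps produce long runs of zeros, which then pack into very few bits. The sketch below only illustrates the delta-of-delta arithmetic on integers; it does not implement the bit-level encoding, and the function names are hypothetical.

```python
def delta_of_delta(timestamps):
    """Encode timestamps as: first value, first delta, then delta-of-deltas."""
    if len(timestamps) < 2:
        return list(timestamps)
    encoded = [timestamps[0], timestamps[1] - timestamps[0]]
    previous_delta = encoded[1]
    for earlier, later in zip(timestamps[1:], timestamps[2:]):
        delta = later - earlier
        encoded.append(delta - previous_delta)
        previous_delta = delta
    return encoded


def decode(encoded):
    """Rebuild the original timestamps from a delta-of-delta encoding."""
    if len(encoded) < 2:
        return list(encoded)
    timestamps = [encoded[0], encoded[0] + encoded[1]]
    delta = encoded[1]
    for dod in encoded[2:]:
        delta += dod
        timestamps.append(timestamps[-1] + delta)
    return timestamps


samples = [1000, 1010, 1020, 1031, 1041]
encoded = delta_of_delta(samples)
print(encoded)  # [1000, 10, 0, 1, -1]
```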

TSDB Design - Wide tables

One row/doc per time period, columns are samples

| series_id | start | t+1 | t+2 | t+3 | … |
|-----------|-------|-----|-----|-----|---|
| s01 | 00:00:10 | 2.56 | 3.12 | 4.42 | … |
| s02 | 00:00:10 | 4.12 | 5.12 | 6.12 | … |
| s01 | 00:00:20 | 4.23 | 4.44 | 4.76 | … |

…
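The wide-table layout can be sketched as a regrouping of point-based rows into one row per series and time period. The function name `to_wide_rows`, the integer timestamps and the sample values are assumptions made for illustration; the sketch also assumes regular sampling, since the position of a value inside its row is the only record of its offset.

```python
from collections import defaultdict


def to_wide_rows(points, period):
    """Group (series_id, timestamp, value) points into one row per period."""
    rows = defaultdict(list)
    # Sort by series then time so that values land in chronological order.
    for series_id, timestamp, value in sorted(points, key=lambda p: (p[0], p[1])):
        start = timestamp - timestamp % period  # beginning of the period
        rows[(series_id, start)].append(value)
    return dict(rows)


points = [
    ("s01", 10, 2.56), ("s01", 11, 3.12), ("s01", 12, 4.42),
    ("s02", 10, 4.12),
    ("s01", 20, 4.23),
]
wide = to_wide_rows(points, period=10)

for (series_id, start), samples in wide.items():
    print(series_id, start, samples)
```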

TSDB Design - Hybrid tables

One row/doc per time period, completed lines are stored as BLOB.

| series_id | start | t+1 | t+2 | t+3 | … | compressed |
|-----------|-------|-----|-----|-----|---|------------|
| s01 | 00:00:10 | | | | … | {…} |
| s02 | 00:00:10 | | | | … | {…} |
| s01 | 00:00:20 | 4.23 | 4.44 | … | | |

…

TSDB Design - Direct BLOB insertion

Usually with memory cache.

| series_id | start | data |
|-----------|-------|------|
| s01 | 00:00:10 | {…} |
| s02 | 00:00:10 | {…} |
| s01 | 00:00:20 | {…} |

…

Some optimizations

- Pre-aggregation: pre-compute common aggregation with common granularities - days, months, etc

- Use a custom data format as input: json, protobuf, custom format (warp10.io, Influx Line Protocol)…
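Pre-aggregation can be sketched as keeping running statistics per time bucket, so that common queries read the summaries instead of the raw points. The function name `pre_aggregate` and the choice of statistics (count, sum, min, max) are illustrative assumptions.

```python
def pre_aggregate(points, granularity):
    """Return {bucket_start: (count, total, minimum, maximum)}.

    points is an iterable of (timestamp, value) pairs; granularity is the
    bucket width expressed in the same unit as the timestamps.
    """
    buckets = {}
    for timestamp, value in points:
        start = timestamp - timestamp % granularity
        if start not in buckets:
            buckets[start] = (1, value, value, value)
        else:
            count, total, low, high = buckets[start]
            buckets[start] = (count + 1, total + value,
                              min(low, value), max(high, value))
    return buckets


points = [(0, 1.0), (30, 3.0), (60, 5.0), (90, 7.0), (120, 2.0)]
per_minute = pre_aggregate(points, granularity=60)

# The mean is derived from the stored count and sum.
count, total, low, high = per_minute[0]
print(total / count)  # 2.0
```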

Retention policies

- Round-robin tables approaches (RRDTool, Graphite): keep only a round-robin buffer of data. Use a fixed-size storage.

- InfluxDB lets you configure the retention policy (duration, replication, shard group duration)
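The round-robin idea can be illustrated with a fixed-size buffer in which the oldest point is dropped whenever a new one arrives. This is only a sketch of the principle: the class name `RoundRobinSeries` is hypothetical, and it does not reproduce the on-disk format of RRDTool or Graphite.

```python
from collections import deque


class RoundRobinSeries:
    """Keep only the most recent `capacity` points of a series."""

    def __init__(self, capacity: int):
        # A deque with maxlen silently discards the oldest item when full.
        self._points = deque(maxlen=capacity)

    def append(self, timestamp, value):
        self._points.append((timestamp, value))

    def points(self):
        return list(self._points)


series = RoundRobinSeries(capacity=3)
for t in range(5):
    series.append(t, t * 1.5)

print(series.points())  # [(2, 3.0), (3, 4.5), (4, 6.0)]
```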

Some existing solutions

See also list of TSDB

OpenTSDB

- OpenTSDB

- Open Source (LGPL)

- Hadoop/HBase backend (requires HBase)

- Direct BLOB insertion

- REST API

- Millisecond timestamps

OpenTSDB - Writing data

Inserting values into the database:

put <metric> <timestamp> <value> <tagk1=tagv1[ tagk2=tagv2 ...tagkN=tagvN]>

For instance:

put sys.cpu.user 1356998400 42.5 host=webserver01 cpu=0
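A small formatter can produce such put lines from structured values. This is a sketch: the helper name `format_put` is hypothetical, it does no validation of metric or tag characters, and it only enforces the OpenTSDB rule that a series needs at least one tag.

```python
def format_put(metric, timestamp, value, tags):
    """Format one datapoint as an OpenTSDB 'put' line."""
    if not tags:
        raise ValueError("OpenTSDB requires at least one tag per time series")
    tag_text = " ".join(f"{key}={val}" for key, val in tags.items())
    return f"put {metric} {timestamp} {value} {tag_text}"


line = format_put("sys.cpu.user", 1356998400, 42.5,
                  {"host": "webserver01", "cpu": 0})
print(line)  # put sys.cpu.user 1356998400 42.5 host=webserver01 cpu=0
```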

OpenTSDB - Series identifier

- A series key is a combination of metric name (sys.cpu.user) and tag values (host/cpu).

- Every time series in OpenTSDB must have at least one tag.

- Offers rapid aggregation through queries, e.g. sum:sys.cpu.user{host=webserver01,cpu=42} or sum:sys.cpu.user{host=webserver01}

OpenTSDB queries principle

- Filtering

- Grouping

- Downsampling

- Interpolation

- Aggregation

- Functions/Expressions

InfluxDB

- InfluxDB

- Open Source (monolithic version)

- No external dependency (Go in 2.x, Rust in 3.x)

- Custom TSM Tree storage (Time-Structured Merge Tree)

- Nanosecond timestamps

- REST API, CLI tool, language bindings

- Previously SQL-like query language (InfluxQL), moved to Flux (functional scripting language)

InfluxDB - Writing data

Using Line Protocol:

<measurement>[,<tag-key>=<tag-value>...] <field-key>=<field-value>[,<field2-key>=<field2-value>...] [unix-nano-timestamp]

Example:

cpu,host=serverA,region=us_west value=0.64

payment,device=mobile,product=Notepad,method=credit billed=33,licenses=3 1434067467100293230

stock,symbol=AAPL bid=127.46,ask=127.48

temperature,machine=unit42,type=assembly external=25,internal=37 1434067467000000000
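Lines in this format can be generated from structured data. The sketch below is deliberately simplified and the helper name `to_line_protocol` is hypothetical: it does not escape spaces or commas, and it ignores the type markers of the real protocol (string field values need double quotes, integers an `i` suffix).

```python
def to_line_protocol(measurement, tags, fields, timestamp_ns=None):
    """Format one point as a (simplified) InfluxDB Line Protocol line."""
    # Tags follow the measurement name, each one introduced by a comma.
    tag_text = "".join(f",{key}={value}" for key, value in sorted(tags.items()))
    field_text = ",".join(f"{key}={value}" for key, value in fields.items())
    line = f"{measurement}{tag_text} {field_text}"
    if timestamp_ns is not None:
        line += f" {timestamp_ns}"
    return line


cpu_line = to_line_protocol(
    "cpu", {"host": "serverA", "region": "us_west"}, {"value": 0.64})
temperature_line = to_line_protocol(
    "temperature", {"machine": "unit42", "type": "assembly"},
    {"external": 25, "internal": 37}, 1434067467000000000)

print(cpu_line)
print(temperature_line)
```

When the timestamp is omitted, the server assigns its own reception time to the point.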

Older InfluxQL query language

SQL-inspired - Example:

SELECT MEAN("water_level")

FROM "h2o_feet"

WHERE "location"='santa_monica'

AND time >= '2015-09-18T21:30:00Z'

AND time <= now()

GROUP BY time(1h) fill(none)

New Flux language (v2)

- Functional data scripting language

- Supports multiple data sources:

- Time series databases (InfluxDB)

- Relational SQL databases

- CSV

- Principle: Retrieve / Filter / Process / Return

Flux example

// Sample query

from(bucket: "noaa")

|> range(start: 2015-09-18, stop: 2015-10-18)

|> filter(fn: (r) => r._measurement == "h2o_feet")

|> filter(fn: (r) => r.location == "santa_monica")

|> filter(fn: (r) => r._field == "water_level")

|> aggregateWindow(every: 1h, fn: mean)

|> yield(name: "Mean")

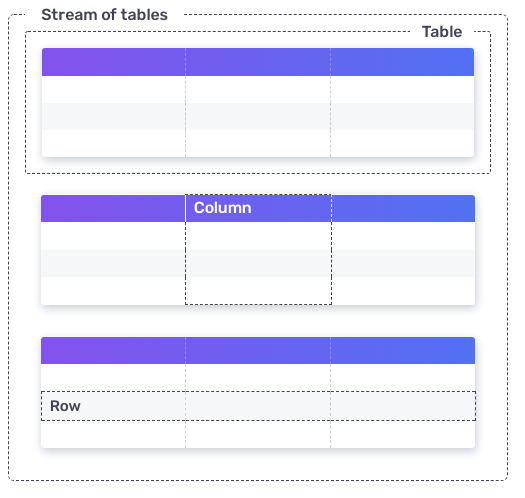

Flux data model

- stream of tables: collection of tables

- table: collection of columns partitioned by group key

- column: collection of values of the same basic type

- row: collection of associated column values

Flux functions (aggregations, selectors) use stream of tables as input and output
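The notion of a stream of tables partitioned by group key can be approximated in plain Python. This is an analogy, not Flux: the function names, the `_value` column convention used here and the sample rows are assumptions made for illustration.

```python
from collections import defaultdict
from statistics import mean


def group_into_tables(rows, group_key):
    """Partition rows into tables, one table per distinct group key value."""
    tables = defaultdict(list)
    for row in rows:
        tables[tuple(row[column] for column in group_key)].append(row)
    return dict(tables)


def mean_per_table(tables, column="_value"):
    """Aggregation: takes a stream of tables, returns one value per table."""
    return {key: mean(row[column] for row in table)
            for key, table in tables.items()}


rows = [
    {"location": "santa_monica", "_value": 2.0},
    {"location": "santa_monica", "_value": 4.0},
    {"location": "coyote_creek", "_value": 8.0},
]
tables = group_into_tables(rows, group_key=["location"])
means = mean_per_table(tables)

print(means)
```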

Query structure

InfluxDB platform

Platform for collecting, storing, graphing, and alerting on time series data

- Telegraf: metrics collection agent

- InfluxDB:

- Storage agent

- Embedded web-based UI

- Metrics and events processing and alerting engine (notifications)

Warp10.io

- Warp10

- Dedicated to high-volume GeoTime Series (GTS) handling

- Collection, storage and analysis of GTS

- Open-source (Apache 2.0 OSL)

- Server-side analysis scripts

- Storage through LevelDB (standalone) or HBase (distributed)

Warp10.io - writing data

- POST queries to an ingress endpoint

- Encoding: TS/LAT:LON/ELEV CLASS{LABELS} VALUE

- Time precision: from ns to ms

- 5 value types: long, double, boolean, string, binary

POST /api/v0/update HTTP/1.1

Host: host

X-Warp10-Token: TOKEN

Content-Type: text/plain

1380475081000000// foo{label0=val0,label1=val1} 123

/48.0:-4.5/ bar{label0=val0} 3.14

1380475081123456/45.0:-0.01/10000000 foobar{label1=val1} T
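The three input lines above can be reproduced with a small formatter. This is a sketch with a hypothetical helper name `to_gts_line`; it only handles numeric and boolean values and does not perform the encoding that string values and special characters would require.

```python
def to_gts_line(class_name, labels, value,
                timestamp=None, location=None, elevation=None):
    """Format one datapoint as TS/LAT:LON/ELEV CLASS{LABELS} VALUE."""
    ts = "" if timestamp is None else str(timestamp)
    loc = "" if location is None else f"{location[0]}:{location[1]}"
    elev = "" if elevation is None else str(elevation)
    label_text = ",".join(f"{key}={val}" for key, val in labels.items())
    # Booleans are written as T or F; bool is tested before other types.
    if isinstance(value, bool):
        value_text = "T" if value else "F"
    else:
        value_text = str(value)
    return f"{ts}/{loc}/{elev} {class_name}{{{label_text}}} {value_text}"


first = to_gts_line("foo", {"label0": "val0", "label1": "val1"}, 123,
                    timestamp=1380475081000000)
second = to_gts_line("bar", {"label0": "val0"}, 3.14, location=(48.0, -4.5))
third = to_gts_line("foobar", {"label1": "val1"}, True,
                    timestamp=1380475081123456, location=(45.0, -0.01),
                    elevation=10000000)

print(first)
print(second)
print(third)
```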

Warp10.io - WarpScript

- Expressive query language (fully functional, Turing-complete)

- 6 high-level TS operations: BUCKETIZE, MAP, REDUCE, FILL, APPLY, FILTER

- RPN-inspired syntax for WarpScript (stack-based)

- Alternative syntax FLoWS

- Output format: compact JSON objects
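The stack-based (RPN) evaluation model can be illustrated with a tiny evaluator: operands are pushed on a stack, and each operator pops its arguments and pushes its result. This is a generic RPN sketch restricted to arithmetic, not an implementation of WarpScript; the function name `evaluate_rpn` is hypothetical.

```python
def evaluate_rpn(expression):
    """Evaluate a space-separated RPN expression and return the final stack."""
    operations = {
        "+": lambda a, b: a + b,
        "-": lambda a, b: a - b,
        "*": lambda a, b: a * b,
        "/": lambda a, b: a / b,
    }
    stack = []
    for token in expression.split():
        if token in operations:
            # Operators consume the two topmost values of the stack.
            right = stack.pop()
            left = stack.pop()
            stack.append(operations[token](left, right))
        else:
            stack.append(float(token))
    return stack


print(evaluate_rpn("3 4 + 2 *"))  # [14.0]
```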

Warp10.io - WarpScript example

'TOKEN_READ' 'token' STORE // Storing token

[ $token 'consumption' {} NOW 1 h ] FETCH // Fetch all values from now to 1 hour ago

[ SWAP bucketizer.max 0 1 m 0 ] BUCKETIZE // Get max value for each minute

[ SWAP [ 'room' ] reducer.sum ] REDUCE // Aggregate all consumptions by room

[ SWAP mapper.rate 1 0 0 ] MAP // Consumption being a counter, compute the rate

Warp10 architecture

- Standalone version (single jar, leveldb)

- Standalone with datalog replication or sharding

- Distributed version: Kafka for messaging, HBase for storage

Warp10 test

- Create a sandbox server on http://sandbox.senx.io/

- Up to 10k series

Visualisation interfaces

InfluxDB Giraffe

Kibana

- Part of the ELK stack (Elasticsearch)

- Generic dashboard but with time-focused components

Grafana

Grafana (Graphite, InfluxDB, OpenTSDB, Prometheus…)

Initially a fork of Kibana

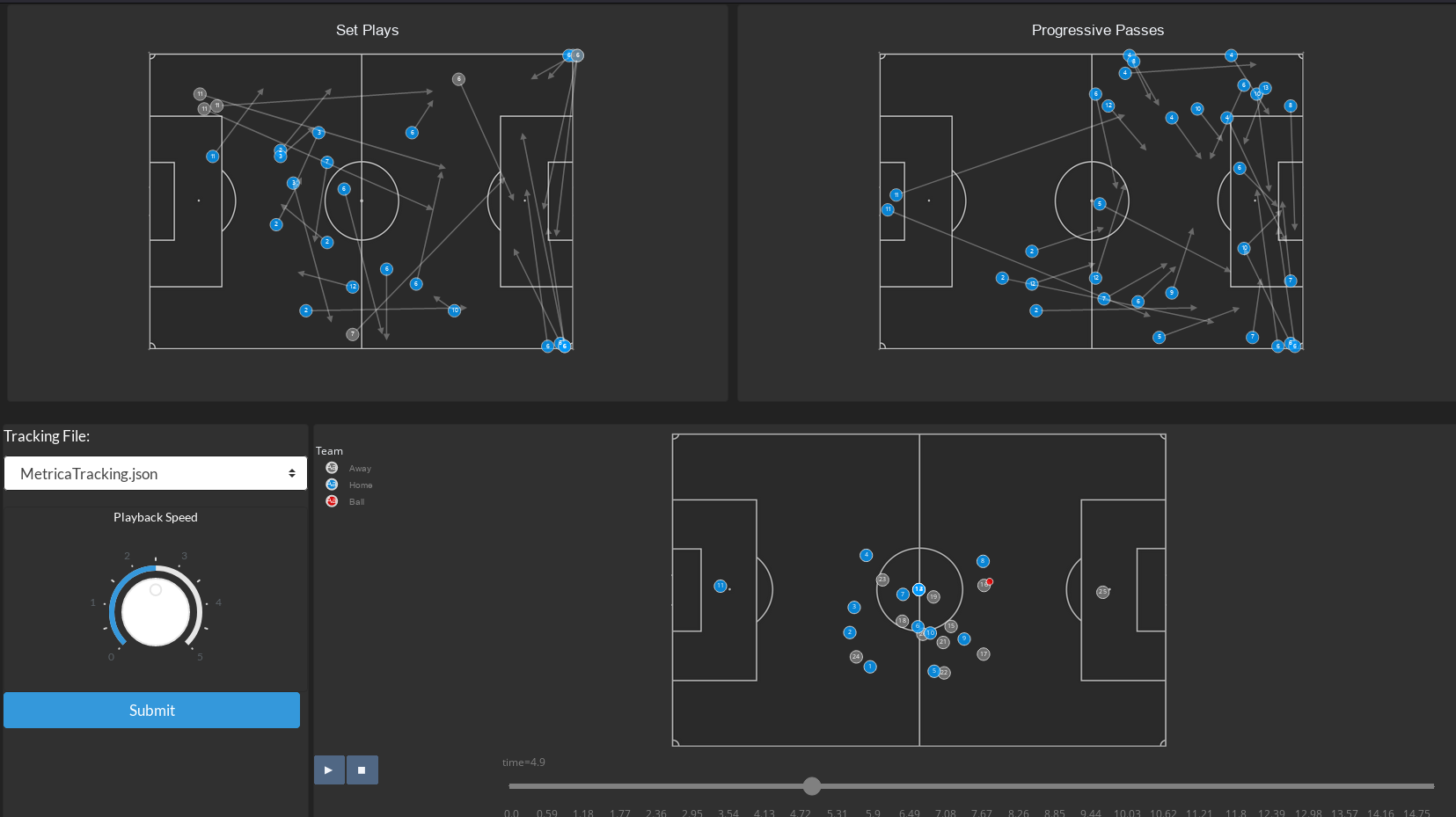

Dash (PlotLy)

Dashboard creation platform https://plotly.com/dash/

For instance soccer game viz

Other visualizations

Example dynamic visualization: NBA Data visualisation (Source)

For reference/inspiration - Timeviz Browser: https://browser.timeviz.net/

ObservableHQ notebooks on Time Series viz

References

- Time Series Database Query Languages (Philipp Bende)

- Survey and Comparison of Open Source Time Series Databases (Andreas Bader, Oliver Kopp, Michael Falkenthal)

- InfluxDB comparison with other TSDBs

- Interesting comparison of different TSDBs

- Time Series France - French-speaking community on time series, from data collection to data exploitation

- Awesome Time Series - collection of resources for working with time series data

- https://timeseriesclassification.com/ (datasets + code)